After some experience with belts (see a previous post), we have changed the Go language to provide the functionality we were missing from its channel abstraction. We refer to the modified environment as Lsub Go.

NB: I'm editing this post to reflect the new interface used after some discussion and reading the comments.

The modified compiler and runtime can be retrieved with

git clone git://git.lsub.org/golang.git

The language changes described below required modifying both the compiler and the run-time.

We also tried to disturb the core language as little as feasible, while still being able to track the changes made.

- The builtin close can now also be called on a chan<- data type (a channel to send things through). This no longer causes a panic as it used to.

- A variant, close(chan, interface{}), has been added to both close a channel (for sending or receiving) and report the cause for the closing. close(c) is equivalent to close(c, nil).

- A new builtin, cerror(chan) error, has been added to report the cause for a closed channel (or nil if the channel is not closed or was closed without indicating the reason).

- The primitive send operation now returns a bool value indicating if the send could be made or not (the channel might be closed). Thus, ok := c <- 0 now indicates if we could send or the channel was closed and we couldn't send anymore.
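
For readers without the modified toolchain, the semantics listed above can be approximated in stock Go. The following is only a rough sketch: Pipe, NewPipe, Send, Recv, Close, Cerror and demo are hypothetical names invented for this illustration, the cause is simplified to an error value, and none of this is how Lsub Go implements the builtins.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// Pipe is a hypothetical stand-in for an Lsub Go channel of ints.
type Pipe struct {
	data chan int
	done chan struct{} // closed by Close, from either end
	once sync.Once
	err  error // cause given to Close; written before done is closed
}

func NewPipe() *Pipe {
	return &Pipe{data: make(chan int), done: make(chan struct{})}
}

// Close mimics close(c, cause); only the first call has any effect.
func (p *Pipe) Close(cause error) {
	p.once.Do(func() {
		p.err = cause
		close(p.done)
	})
}

// Send mimics ok := c <- v: false means the pipe was closed.
func (p *Pipe) Send(v int) bool {
	select {
	case <-p.done:
		return false
	default:
	}
	select {
	case p.data <- v:
		return true
	case <-p.done:
		return false
	}
}

// Recv mimics v, ok := <-c.
func (p *Pipe) Recv() (int, bool) {
	select {
	case v := <-p.data:
		return v, true
	case <-p.done:
		return 0, false
	}
}

// Cerror mimics cerror(c): nil while open or when closed without a cause.
func (p *Pipe) Cerror() error {
	select {
	case <-p.done:
		return p.err
	default:
		return nil
	}
}

// demo sends twice to a receiver that takes one value and then closes.
func demo() (first, second bool, cause error) {
	p := NewPipe()
	go func() {
		p.Recv()
		p.Close(errors.New("not interested in your stream"))
	}()
	first = p.Send(1)
	second = p.Send(2)
	return first, second, p.Cerror()
}

func main() {
	first, second, cause := demo()
	fmt.Println(first, second, cause) // true false not interested in your stream
}
```

The extra done channel is what lets either end close the pipe without making the other end panic; the language change gives the same effect without the wrapper type.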

Not directly related to this change, but because we already changed the language and because we missed this construct a lot...

- A new doselect construct has been added, which is equivalent to a for loop with a single select construct as the body. It is a looping select.

The doselect construct is very convenient. Many times a process would just loop serving a set of channels, and

- There is no actual reason for having to indent twice (the loop and the select)

- It would be desirable to be able to break the service loop directly or to continue with the next request.

For example:

    doselect {
    case x, ok := <-c1:
        if !ok {
            ...
            break
        }
        ...
    case x, ok := <-c2:
        ...
    default:
        ...
    }

Here, break leaves the entire loop, and there is no need to add a label to the loop implied by the construct.

Also, continue would continue with the next request selected in the loop.
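
For comparison, this is roughly what such a service loop looks like in stock Go, where the loop and the select are separate constructs. It is a sketch only: serve is a hypothetical function made up for this illustration, and the label is needed because a bare break would leave only the select.

```go
package main

import "fmt"

// serve collects values from c1 and c2 until c1 is closed.
func serve(c1, c2 <-chan int) []int {
	var seen []int
loop:
	for {
		select {
		case x, ok := <-c1:
			if !ok {
				break loop // a bare break would only leave the select
			}
			seen = append(seen, x)
		case x, ok := <-c2:
			if !ok {
				c2 = nil // a nil channel is never selected again
				continue // goes on with the next iteration of the for loop
			}
			seen = append(seen, x)
		}
	}
	return seen
}

func main() {
	c1, c2 := make(chan int, 2), make(chan int)
	c1 <- 1
	c1 <- 2
	close(c1)
	fmt.Println(serve(c1, c2)) // [1 2]
}
```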

But this is just a convenience, and not really a change that would make a difference.

Now, the important change is to be able to write:

    for x := range inc {
        dosomethingto(x)
        if ok := outc <- x; !ok {
            close(inc, "not interested in your stream")
            break
        }
    }

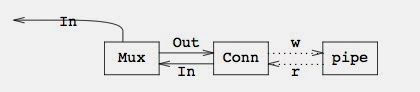

In this example, we loop receiving elements from an input channel, inc, and after some processing, send the output to another process through outc. Now, if somehow that process is no longer interested in the stream we are piping to it, there is no point in

- forcing it to drain the entire stream, or

- forcing our interface to have another different channel to report the error, or

- ignoring the sender and leaving it forever blocked trying to send

Instead, the receiver of outc might just close(outc) to indicate to any sender that nobody will ever be interested in more elements sent through there.

After that, our attempt to send would return false (recorded in ok in this example), and we can check that value to stop sending.

Furthermore, because we are sending data received from an input channel, inc, we can also tell the sender feeding us data that we are done, and also use close to report why.

Thus, an entire pipeline of processes can cleanly shut down and stop when it is necessary to do so. The same could have been done by recovering from panics, but this is not really a panic. It is not that different from detecting EOF when reading, and it should be handled by normal error checking code, not by panic/recover tricks. Moreover, such tricks would be inconvenient if the function is doing more than just sending through the channel, because a recover does not continue with the execution of the panicking function.
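
To make the last point concrete, here is a sketch of the panic/recover alternative in stock Go (not Lsub Go). forward is a hypothetical function written for this illustration. Once a send panics, the deferred recover stops the panic, but forward returns at that point and the rest of its body never runs.

```go
package main

import "fmt"

// forward sends each value to out. In stock Go a send on a closed
// channel panics, so the only way to survive it is a deferred recover.
func forward(vals []int, out chan<- int) (sent int, finished bool, err error) {
	defer func() {
		if r := recover(); r != nil {
			// Execution does not resume inside the loop below;
			// forward simply returns with the named results as they are.
			err = fmt.Errorf("send failed: %v", r)
		}
	}()
	for _, v := range vals {
		out <- v
		sent++
	}
	finished = true // never reached if a send panicked
	return
}

func main() {
	out := make(chan int, 1)
	close(out) // simulate a receiver that gave up on the stream
	sent, finished, err := forward([]int{1, 2}, out)
	fmt.Println(sent, finished, err != nil) // 0 false true
}
```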

To continue with the motivating example, this code could report to the sender the cause that led to the closing of outc, by doing something like:

    for x := range inc {
        dosomethingto(x)
        if ok := outc <- x; !ok {
            close(inc, cerror(outc))
            break
        }
    }

Unlike before, this time the actual cause is reported through the pipeline of processes involved and anyone might now issue a reasonable error diagnostic more useful than "the channel was closed".

The repository at git://git.lsub.org/golang.git contains a full Go distribution as retrieved from the Go Mercurial repository, with a few modifications to make these changes. The compiler packages from the standard library (used by go fmt among other things) are also updated to reflect the new changes.

To reduce the changes required, we did not modify the select cases to let you send with the ok := outc <- x construct. Thus, within select you can only try to send; the send would simply fail on a closed channel, but it would neither panic nor break your construct. If it matters to you, your code should probably check whether the send could be done. We might further modify the Lsub version of Go to let select handle this new case, but we haven't done it yet.