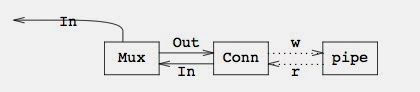

This is the third post in a series describing what we did as part of the ongoing effort to build a new OS, which we have just named "Clive". The previous two posts described how channels were used and bridged to outside pipes and connections, and how they were multiplexed to make it easy to write protocols in a CSP style.

This post describes what we did regarding file trees and name spaces. That won't come as a surprise, considering that we come from the Plan 9, Inferno, Plan B, NIX, and Octopus heritage.

But first things last. Yes, last. A name space is separate from the conventional file tree abstraction. That is, name spaces exist on their own and map prefixes to directory entries. A name space is searched using this interface:

```go
type Finder interface {
	// Find streams the directory entries under path that match pred.
	Find(path, pred string, depth0 int) <-chan zx.Dir
}
```

Its only operation, Find, lets the caller obtain a stream of directory entries matching the request.

The entries returned lie within the subtree rooted at the given path and must match the supplied predicate.
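To make the Finder idea concrete, here is a toy, in-memory sketch in Go. It is not Clive's code: `Dir`, `NS`, and `under` are hypothetical names, `Dir` only imitates `zx.Dir` as a map of attributes, and the predicate is a plain Go function rather than a predicate string. It shows a name space mapping prefixes to entries, and a `Find` that returns immediately while a goroutine streams the matching entries under the given path.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Dir is a hypothetical stand-in for zx.Dir: a directory entry represented
// as a map of attributes such as "path" and "name".
type Dir map[string]string

// NS is a toy name space mapping path prefixes to the entries served there.
type NS struct {
	mounts map[string][]Dir
}

// under reports whether p lies within the subtree rooted at root.
func under(root, p string) bool {
	if root == "/" {
		return strings.HasPrefix(p, "/")
	}
	return p == root || strings.HasPrefix(p, root+"/")
}

// Find returns at once; a goroutine streams every entry under path that
// satisfies pred and closes the channel when done.
func (ns *NS) Find(path string, pred func(Dir) bool) <-chan Dir {
	c := make(chan Dir)
	go func() {
		defer close(c)
		prefixes := make([]string, 0, len(ns.mounts))
		for p := range ns.mounts {
			prefixes = append(prefixes, p)
		}
		sort.Strings(prefixes) // deterministic order for the example
		for _, pref := range prefixes {
			// Skip mounts that cannot contain anything under path.
			if !under(path, pref) && !under(pref, path) {
				continue
			}
			for _, d := range ns.mounts[pref] {
				if under(path, d["path"]) && pred(d) {
					c <- d
				}
			}
		}
	}()
	return c
}

func main() {
	ns := &NS{mounts: map[string][]Dir{
		"/a": {{"path": "/a/x.c", "name": "x.c"}, {"path": "/a/b/y.h", "name": "y.h"}},
		"/z": {{"path": "/z/w.c", "name": "w.c"}},
	}}
	isC := func(d Dir) bool { return strings.HasSuffix(d["name"], ".c") }
	for d := range ns.Find("/a", isC) {
		fmt.Println(d["path"])
	}
}
```

Closing the channel when the walk ends lets callers simply `range` over the results. A consumer that stops reading early would leave the goroutine blocked, so a real implementation would need some way to cancel the request.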

Predicates are quite expressive; their design is inspired by the UNIX find command, after which the operation is named.

For example, the predicate

```
~ name "*.[ch]" & depth < 3
```

can be used to find files whose names match C source file names (ending in `.c` or `.h`), at a depth of less than 3 counting from the given path.
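As a rough illustration of what the example predicate tests, the Go sketch below hand-codes it with `path.Match` from the standard library. The names `depth` and `matchesExample` are hypothetical, and counting the given path itself as depth 0 is an assumption about how depth is measured; Clive evaluates predicate strings with its own parser, which this does not model.

```go
package main

import (
	"fmt"
	"path"
	"strings"
)

// depth returns how many levels p lies below root: 0 for root itself,
// 1 for its direct children, and so on. It assumes p is a clean absolute
// path inside the subtree rooted at root.
func depth(root, p string) int {
	if p == root {
		return 0
	}
	rel := strings.TrimPrefix(p, strings.TrimSuffix(root, "/")+"/")
	return strings.Count(rel, "/") + 1
}

// matchesExample hand-codes the predicate ~ name "*.[ch]" & depth < 3.
func matchesExample(root, p string) bool {
	ok, err := path.Match("*.[ch]", path.Base(p))
	return err == nil && ok && depth(root, p) < 3
}

func main() {
	for _, p := range []string{"/a/b/x.c", "/a/b/c/y.h", "/a/b/c/d/z.h", "/a/b/x.o"} {
		fmt.Println(p, matchesExample("/a/b", p))
	}
}
```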

Note also that the results are delivered through a channel. This means that the operation may be issued and travel to a name space at the other end of the network, while the caller retrieves the stream of replies whenever it wants (or passes the stream to another process).
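A minimal sketch of that decoupling, assuming a hypothetical `remoteFind` that merely simulates a remote reply stream: the call returns immediately, and the channel is handed to a separate goroutine (the hypothetical `count`) that consumes it while the caller goes on with other work.

```go
package main

import "fmt"

// remoteFind is a hypothetical stand-in for a Find served at the other end
// of the network: it returns at once, and replies arrive on the channel.
func remoteFind(paths []string) <-chan string {
	c := make(chan string)
	go func() {
		defer close(c)
		for _, p := range paths {
			c <- p
		}
	}()
	return c
}

// count is another process that takes ownership of the stream and reports
// how many entries it received once the stream is closed.
func count(in <-chan string, done chan<- int) {
	n := 0
	for range in {
		n++
	}
	done <- n
}

func main() {
	replies := remoteFind([]string{"/a/b/x.c", "/a/b/y.h"})
	done := make(chan int)
	go count(replies, done) // hand the stream to another goroutine
	// The caller is free to do other work here.
	fmt.Println("entries:", <-done)
}
```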

Tasks such as removing all object files within a subtree can now be done in a couple of calls: one to find the stream of entries, and another to convert that stream into a stream of remove requests.

And here is where the first thing, deferred until now, comes in. The interface for a file tree used across the network relies on channels, acting as promises, to retrieve the result of each requested operation. Furthermore, those channels are buffered in many cases (e.g., for all error indications) and may even be ignored if the caller is going to check the status of the entire request later in some other way.
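The following Go sketch illustrates why buffering makes it safe to ignore such a promise. The `remove` function and the `protected` set are hypothetical, used only to produce an error on demand. The reply channel has room for one value, so the goroutine that reports an error never blocks, even if nobody ever receives from the channel; on success it just closes the channel, and a receive then yields `nil`.

```go
package main

import "fmt"

// protected is a hypothetical set of paths whose removal fails.
var protected = map[string]bool{"/a/b/keep.c": true}

// remove is a hypothetical asynchronous remove. It returns at once with a
// channel acting as a promise for the outcome. The buffer of one lets the
// goroutine deliver an error and exit even if nobody ever receives; on
// success the channel is just closed, so a receive yields nil.
func remove(path string) <-chan error {
	ec := make(chan error, 1)
	go func() {
		defer close(ec)
		if protected[path] {
			ec <- fmt.Errorf("remove %s: permission denied", path)
		}
	}()
	return ec
}

func main() {
	remove("/a/b/keep.c") // promise ignored: the goroutine still finishes
	if err := <-remove("/a/b/keep.c"); err != nil {
		fmt.Println("error:", err)
	}
	if err := <-remove("/a/b/x.c"); err == nil {
		fmt.Println("removed /a/b/x.c")
	}
}
```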

Thus, we can actually stream the series of removes in this example. Note that the call to Remove simply issues the request and returns a channel that can be used to receive the reply later on.

```go
oc := ns.Find("/a/b", `~ name "*.[ch]" & depth < 3`, 0)
for d := range oc {
	// Issue each remove as the entry arrives; the returned
	// error channel is ignored here.
	fs.Remove(d["path"])
}
```

This is just an example. A realistic program would not ignore the error channel returned by the call to Remove. It would probably defer the check until later, or pass the channel to another process that checks that the removes were made.
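One possible shape for that, sketched in Go with hypothetical names (`remove`, `protected`, `checker`, and a fixed list standing in for the results of `Find`): the loop issues each remove and hands its reply channel to a checker goroutine, which collects the outcomes and reports how many removes failed.

```go
package main

import "fmt"

// protected is a hypothetical set of paths whose removal fails.
var protected = map[string]bool{"/a/b/keep.h": true}

// remove is a hypothetical asynchronous remove returning a buffered
// reply channel; it is closed without a value on success.
func remove(path string) <-chan error {
	ec := make(chan error, 1)
	go func() {
		defer close(ec)
		if protected[path] {
			ec <- fmt.Errorf("remove %s: permission denied", path)
		}
	}()
	return ec
}

// checker receives pending replies, in issue order, and reports how many
// removes failed once the pending stream is closed.
func checker(pending <-chan (<-chan error), failed chan<- int) {
	n := 0
	for ec := range pending {
		if err := <-ec; err != nil {
			fmt.Println(err)
			n++
		}
	}
	failed <- n
}

func main() {
	// A fixed list stands in for the stream returned by Find.
	found := []string{"/a/b/x.c", "/a/b/keep.h", "/a/b/c/y.h"}
	// Buffered so that issuing removes never waits for the checker.
	pending := make(chan (<-chan error), len(found))
	failed := make(chan int)
	go checker(pending, failed)
	for _, p := range found {
		pending <- remove(p) // issue the remove; checking happens elsewhere
	}
	close(pending)
	fmt.Println("failed removes:", <-failed)
}
```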

Nevertheless, the example gives a glimpse of the power of the file system interfaces used in Clive.

Reading and writing also rely on input and output channels.

If you are interested in more details, you can read zx, which describes the file system and name space interfaces, and perhaps also nchan, which describes the CSP-like tools used in Clive and is important for understanding how these interfaces are used in practice.